What is RunwayML?

- Generate video from images and text prompts

- Add camera movement like pans, zooms, and tracking shots

- Extend short video clips into longer videos

- Add special effects and slow motion, and remove backgrounds or other objects

Step 1: Create a Shot List

| Scene Number | Description |
| --- | --- |
| 1 | A big explosion |
| 2 | Close-up of a rock flying by the camera |
| 3 | Rock moving away from the camera |
| 4 | An extreme wide shot of a rock entering a fire nebula |

Create Key Scene Images with Midjourney

- Start with simple prompts based on the scene descriptions from the shot list. Then add complexity: the camera or film stock used, the focal length, and other technical details.

Prompt: a fire nebula explosion centered, low key lighting, shot on arri alexa mini --ar 16:9

- Use Bing's AI, ChatGPT, or a similar tool to refine your prompts and add detail. This is especially useful when you're struggling to get a specific shot.

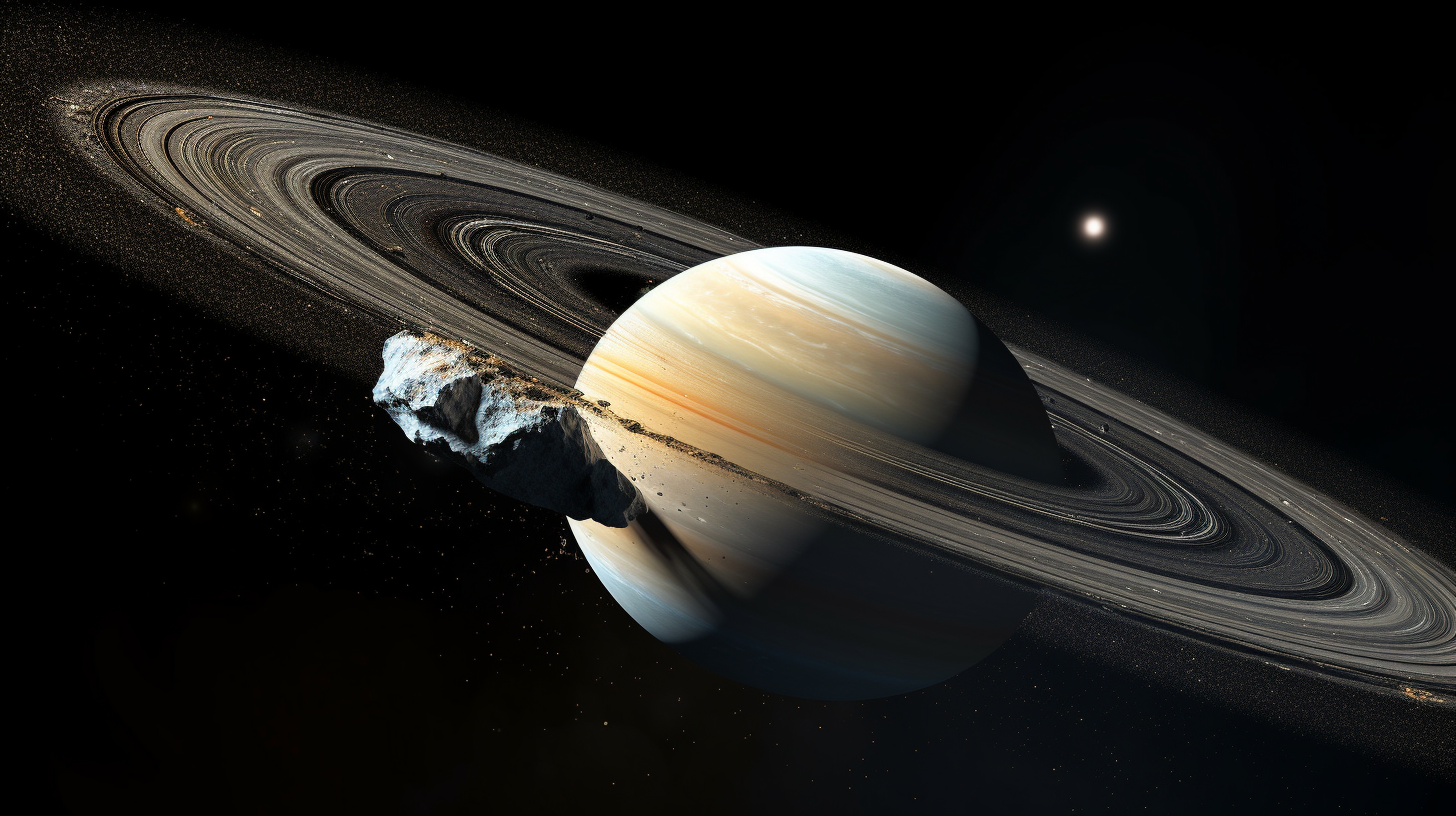

Prompt: A titanium rock falling towards Saturn’s rings, from a top view. The rock is shiny and metallic, with some scratches and dents. The rings are composed of ice and dust particles, with some gaps and variations in thickness. Saturn’s atmosphere is visible in the background, with swirling clouds and storms. The image shows the rock’s trajectory and speed as it approaches the rings. Saturn is slightly off-center in the image, and the rock is closer to the camera than Saturn. The image is rendered in high resolution and has realistic shadows and reflections --ar 16:9 --chaos 10
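
The "start simple, then add detail" workflow can be sketched as a small prompt builder. This is only an illustration for keeping prompts organized: `build_prompt` and its arguments are hypothetical names, not part of Midjourney, and the resulting strings still have to be pasted into /imagine by hand. The `--ar` and `--chaos` parameters are the ones used in the prompts above.

```python
def build_prompt(description, details=(), aspect_ratio="16:9", chaos=None):
    """Compose a Midjourney-style prompt string (hypothetical helper)."""
    # Descriptive text first: the scene, then any technical details.
    text = ", ".join([description, *details])
    # Parameters go at the very end of the prompt.
    params = [f"--ar {aspect_ratio}"]
    if chaos is not None:
        params.append(f"--chaos {chaos}")
    return f"{text} {' '.join(params)}"


# Pass 1: a simple prompt taken from the shot list.
simple = build_prompt("a fire nebula explosion")

# Pass 2: the same scene with framing, lighting, and camera details added.
detailed = build_prompt(
    "a fire nebula explosion centered",
    details=["low key lighting", "shot on arri alexa mini"],
)

print(simple)
print(detailed)
```

Keeping the description and the technical details separate makes it easy to change one detail at a time and compare the results.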

Pro tip: Ensure Visual Consistency

As you start generating your images, you'll quickly see that some results may look different in terms of style, lighting, composition, and other aspects.

This lack of consistency is not ideal for video. In most cases, it's important for the scenes to have a cohesive visual look, allowing them to flow seamlessly and showcase a progression of action.

Here's how you can ensure visual consistency in Midjourney:

- Use the same "seed" number for similarly styled images. The seed is the starting point from which Midjourney generates an image. If you use the same seed for two generations, they are more likely to look similar to each other.

  - How: After you generate an image you like, click on the envelope reaction emoji in Discord. This will give you the seed for that image. Then add --seed YourSeedNumber to the end of your prompt.

- Keep the same aesthetic descriptions. If you find an aesthetic description or style that you like, such as "Kodak Film 2383", "Unreal Engine", or "Futuristic", keep it consistent across the images in the same sequence.

- Use images as input. You can use reference images, or images you've already generated, as input for new generations. This makes the new image more likely to resemble the reference.

  - How: Upload an image to Discord. Then right-click the image and click "Copy image address". Paste that link at the very start of your prompt, right after /imagine.

Prompt: https://s.mj.run/sb0QvZbNl3s side of a titanium iphone, extreme close up shot :: Sparks in the background. Cinematic product shot --ar 16:9
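
Putting the three tips together: the image link goes first, the style description stays the same across the sequence, and `--seed` goes at the end. Here is a minimal sketch, where `consistent_prompt`, the second subject line, and the seed value 1234 are made-up placeholders (use the seed Midjourney actually sends you):

```python
SHARED_STYLE = "Cinematic product shot"  # keep one style description per sequence
SHARED_SEED = 1234  # placeholder; use the seed from the envelope reaction


def consistent_prompt(subject, reference_url=None, style=SHARED_STYLE,
                      seed=SHARED_SEED, aspect_ratio="16:9"):
    """Build a prompt that reuses the same reference image, style, and seed."""
    parts = []
    if reference_url:
        parts.append(reference_url)  # image links come first in the prompt
    parts.append(f"{subject}. {style}")
    parts.append(f"--ar {aspect_ratio} --seed {seed}")  # parameters come last
    return " ".join(parts)


for subject in ["side of a titanium iphone, extreme close up shot",
                "back of a titanium iphone, wide shot"]:
    print(consistent_prompt(subject, "https://s.mj.run/sb0QvZbNl3s"))
```

Only the subject changes between the two prompts, so any difference in the results comes from the subject rather than from a drifting style or seed.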

Animate Scenes with RunwayML

- Specify camera movements like pans and zooms to make scenes more dynamic. You can easily do this from the "Advanced Camera Control" setting.

- Keep your prompts simple, and modify the "speed" slider to add more energy.

- You can extend your clips up to 16 seconds if needed.

- Download your best clips as you generate them, to keep proper track of them.

Edit Clips into a Full Video

- Sequence clips to match the shot list.

- Add the reference video or soundtrack to help you time the cuts.

- Take advantage of CapCut's library of transitions to add more energy to the video.
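
If you download clips as you go, a naming convention makes the sequencing step easier. The sketch below assumes a hypothetical file name pattern like `scene-02_take-1.mp4` (this is not something Runway or CapCut produces for you). It orders the clips by scene number and flags any scene from the shot list that has no clip yet.

```python
import re

SHOT_LIST = {
    1: "A big explosion",
    2: "Close-up of a rock flying by the camera",
    3: "Rock moving away from the camera",
    4: "An extreme wide shot of a rock entering a fire nebula",
}

# Hypothetical naming convention: scene-<number>_take-<number>.mp4
CLIP_PATTERN = re.compile(r"scene-(\d+)_take-(\d+)\.mp4$")


def order_clips(filenames, shot_list=SHOT_LIST):
    """Return (clips sorted by scene and take, scene numbers with no clip)."""
    parsed = []
    for name in filenames:
        match = CLIP_PATTERN.search(name)
        if match:  # ignore files that do not follow the convention
            parsed.append((int(match.group(1)), int(match.group(2)), name))
    parsed.sort()
    covered = {scene for scene, _, _ in parsed}
    missing = sorted(set(shot_list) - covered)
    return [name for _, _, name in parsed], missing


clips, missing = order_clips([
    "scene-03_take-1.mp4",
    "scene-01_take-2.mp4",
    "scene-01_take-1.mp4",
    "scene-04_take-1.mp4",
])
print(clips)
print("Scenes still missing a clip:", missing)
```

The ordered list is the sequence to drop onto the editing timeline, and the missing list tells you which scenes still need to be generated.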

Key Things We Learned Using Runway

- Runway creates slow, cinematic movement

  - The AI tends to generate very slow and subtle motion in scenes. This works well for epic or emotional videos, but energetic, fast-paced movement is hard to achieve even with the motion controls.

- It's hard to create some specific camera angles

  - It was hard to recreate specific camera perspectives, like the POV of the rock flying through space. Be open to new angles if you can't get the exact framing you want.

- Uncommon objects, or objects missing from the AI's training data, are harder to generate

  - For some reason, Runway struggled to generate accurate Saturn rings in multiple images. This suggests that certain elements fall outside what the AI currently knows. I'd recommend using reference images as inputs, rather than just specifying "Saturn".

- It's not production-ready yet, but it can speed up processes like previz

  - The quality isn't quite ready to fully replace professional video production, especially for high-quality ads. But getting a pretty decent video from a few hours of typing on a computer is pretty amazing.

Alternatives to Runway

- Wombo - Generates short video clips from audio files and prompts. More music video focused.

- Simon - Converts text to speech and generates basic animated videos. Better for explainers.

- D-ID - Creates talking head videos from images. Good for testimonial-style videos.

- Synthesia - Converts text and images into lifelike synthetic videos. More manual customization.

Next Steps with Runway

- Practice creating a wide range of video styles - from epic trailers to product explainers and more.

- Combine Runway with live video templates to add talking heads or screenshots to your AI footage.

- Use Runway clips in more complex video compositing programs like After Effects.
